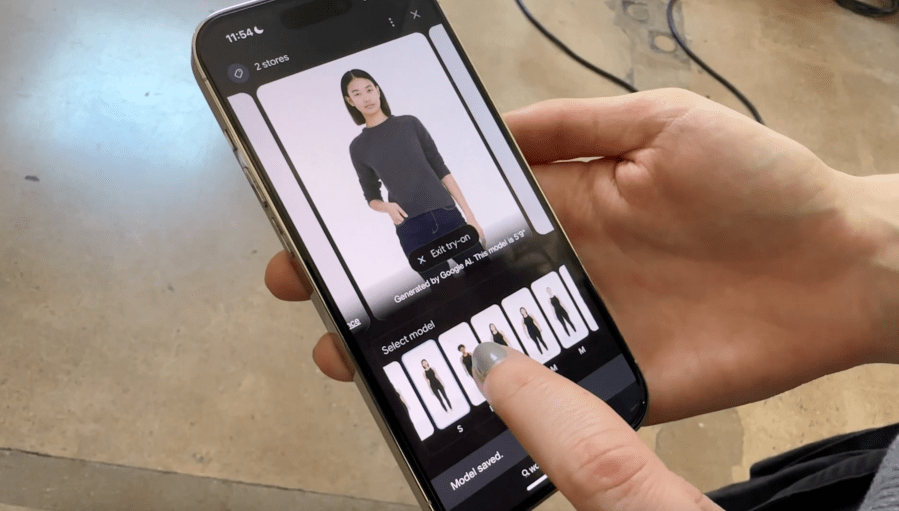

The next time you shop on Google, you might see a tag that says “Virtual try-on.”

This new AI feature lets you visualize what clothes might look like on a model that matches your body type.

I got to go behind the scenes at a Hollywood studio where Google was creating the building blocks for the feature: hundreds of photos of dozens of different models, all to feed the generative AI.

“We actually have over 80 different models of different skin tones, body shapes, ethnicities,” said Sean Scott, general manager of Google Shopping.

The point is to show how an item of clothing would actually behave on a model who looks like you.

“So, how it drapes, how it stretches, how it wrinkles, how it folds,” said Scott.

To create the effect, Google is using AI to blend two images: one of the clothing item and another of the model you choose. The model might never have actually worn the item, but it looks that way.

“We use generative AI to diffuse those two images basically together and create a new image of what the garment looks like from the original model onto what it would look like on a model that’s of your shape and size,” explained Scott.

The hope? A better online shopping experience with fewer returns.

So far, it seems to be working. Google says when users see the try-on feature, they click it more often and look at the clothes on about four different models.

“I don’t think there’s anything worse than when you get a product, get a new shirt or a new sweater and you put it on and it’s like it doesn’t fit or doesn’t look quite the way you thought it would or hope it would. Then you have to return it. So that’s a pretty big letdown from shopping. And so we’re hoping to fix some of that,” concluded Scott.

This is just one way AI is changing the way we shop. Amazon now shows AI-powered summaries of customer reviews, while TripAdvisor and Yelp are using AI summaries to help us know what to expect from restaurants, hotels, and more.